Chris N. H. Street

Behavioral and Social Sciences Department, University of Huddersfield, UK

(cc) Meg Lauber.

We are very inaccurate lie detectors, and tend to believe what others tell us is the truth more often than we ought to. In fact, studies on lie detection typically describe our tendency to believe others as an error in judgment. Although people may look like hopeless lie detectors, the Adaptive Lie Detector theory (ALIED) claims that people are actually making smart, informed judgments. This article explores the ALIED theory and what it means for those wanting to spot a liar.

We believe people are telling the truth more often than they actually are, a tendency called the truth bias (Bond & DePaulo, 2006). According to the Spinozan theory of the mind (Gilbert, Krull & Malone, 1990), we cannot help it. The theory claims that comprehending information is the same thing as believing it to be true. This initial belief is automatic and uncontrollable, which means that sometimes we will incorrectly believe lies to be truths. Only with extra effort or time can we relabel information and believe it to be false. So, when people have limited resources (and so cannot relabel the information as false), they should show a truth bias – and this is what has been found (Gilbert et al., 1990).

But the recently proposed Adaptive Lie Detector theory (ALIED: Street, 2015) argues that people are not lacking control over some automatic bias. Instead, people are making smart, informed judgments. ALIED claims that if there are reliable clues to whether someone is lying or not, people use those clues. But in their absence, people make an ‘educated guess’ based on their understanding of the situation (Figure 1). The informed guess is the cause of the bias.

Figure 1.- Probability of using a given behavior or contextual information to predict a lie.

ALIED’s first claim is that people use reliable indicators when they are available. In one study, people were given an incentive: either lie and receive $10, or tell the truth and watch the clock for 15 minutes (Bond, Howard, Hutchison & Masip, 2013). Unsurprisingly, everyone took the $10. So knowing if someone took the $10 is a perfect predictor of deception. A new set of participants who were told how the speakers were incentivised (to lie for $10) achieved near-perfect lie detection accuracy. With good clues, people are not biased to simply take what others say at face value.

But perfect clues rarely exist in the real world. In fact, verbal and nonverbal behaviours are very poor indicators of deception (DePaulo et al., 2003). Liars and truth-tellers look and sound pretty much the same. So how do we judge if someone is lying or not? We could guess randomly. But why do that when we have a wealth of past experience to draw from? ALIED (Street, 2015) claims this is precisely what we do. Because people usually tell the truth (Halevy, Shalvi & Verschuere, 2013), it makes sense, lacking other information, to guess that this speaker is telling the truth. And this, ALIED claims, is what causes the truth bias.

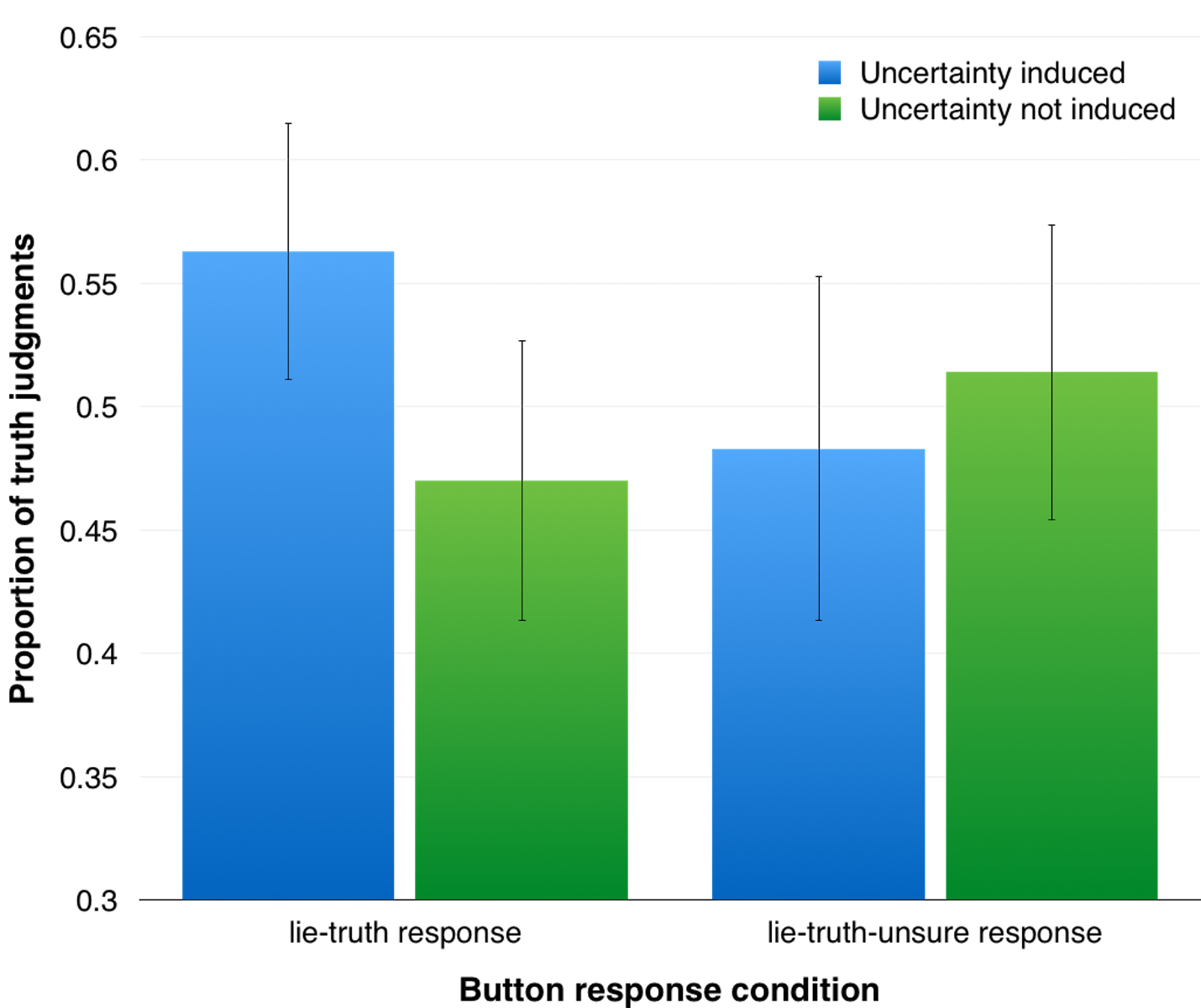

To study this, we have instilled uncertainty in our participants either by limiting the amount of information that participants have (Street & Masip, 2015; Street & Richardson, 2015a), or interrupting participants’ thinking (Street & Kingstone, 2016). Under this uncertainty, people do indeed show a truth bias, as Spinozan theory predicts (Gilbert et al., 1990). But they only show this bias if they are forced to guess. Participants given the option to say they were unsure showed no truth bias (Street & Kingstone, 2016; Street & Richardson, 2015a; see Figure 2). The truth bias comes about because people are uncertain but have to guess.

Figure 2.- Proportion of assertions judged as “true” when the participants are forced to choose versus when they have the possibility of not choosing.

Is this guess really an informed guess? Guessing that most people are telling the truth would be a poor guess if there is reason to think that most people will lie. In one study, we told participants either that most of the speakers would lie or that most would tell the truth (Street & Richardson, 2015b). When there was little information available, participants who believed there would be mostly truth-tellers showed a truth bias. But when they thought there would be mostly liars, they were biased to judge statements as lies (Street & Richardson, 2015b). It would seem that people guess when unsure – a guess based on their current understanding of the situation.

In a recent study, participants learnt that some clues to deception were very useful, while others were particularly poor (Street, Bischof, Vadillo, & Kingstone, 2015). After learning this, we gave some context information: They were either told that most speakers lied, or that most told the truth. Participants did not use context information when good clues were available. But when clues were poor indicators, participants relied on the context information to make a guess. People adapt to the situation, and use the best information available to make their judgment.
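One way to picture this trade-off between clues and context is as a simple Bayesian update. The sketch below is only an illustration under stated assumptions, not a model taken from the studies cited here: the function name `posterior_lie` and all the probabilities are hypothetical, chosen to show how a highly diagnostic clue can dominate the judgment while a weak clue leaves it to follow the context.

```python
def posterior_lie(prior_lie: float, p_cue_given_lie: float, p_cue_given_truth: float) -> float:
    """Bayes' rule: probability that the speaker is lying after observing a clue.

    prior_lie          -- context information: believed proportion of liars (assumed value)
    p_cue_given_lie    -- how often liars show the clue (assumed value)
    p_cue_given_truth  -- how often truth-tellers show the clue (assumed value)
    """
    numerator = p_cue_given_lie * prior_lie
    denominator = numerator + p_cue_given_truth * (1.0 - prior_lie)
    return numerator / denominator


# A highly diagnostic clue (hypothetical: shown by 95% of liars, 5% of truth-tellers).
strong_mostly_truth = posterior_lie(0.2, 0.95, 0.05)  # context: most speakers tell the truth
strong_mostly_lies = posterior_lie(0.8, 0.95, 0.05)   # context: most speakers lie

# A poor clue (hypothetical: shown by 52% of liars, 48% of truth-tellers).
weak_mostly_truth = posterior_lie(0.2, 0.52, 0.48)
weak_mostly_lies = posterior_lie(0.8, 0.52, 0.48)

for label, p in [
    ("strong clue, mostly-truth context", strong_mostly_truth),
    ("strong clue, mostly-lies context", strong_mostly_lies),
    ("weak clue, mostly-truth context", weak_mostly_truth),
    ("weak clue, mostly-lies context", weak_mostly_lies),
]:
    judgment = "lie" if p > 0.5 else "truth"
    print(f"{label}: P(lie) = {p:.2f} -> judge '{judgment}'")
```

With these made-up numbers, the strong clue leads to a "lie" judgment in both contexts, whereas the weak clue barely moves the estimate and the judgment simply tracks the context. This mirrors the pattern described above, although whether people actually compute anything like Bayes' rule is a separate question.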

ALIED (Street, 2015) offers two routes to improving lie detection. First, create reliable clues to deception by, for example, asking speakers to report as many details as possible that can be verified through documents or eyewitnesses (Nahari, Vrij & Fisher, 2013). And second, decrease error by preventing people from guessing when unsure. When participants are forced to guess, they show a bias. But when able to abstain, they do so and the bias disappears (Street & Kingstone, 2016). Are there certain people who are better able to judge when they should abstain? Are some people more aware of the accuracy of their own judgments? Our lab (www.conflictlab.org) is currently working in this direction.

References

Bond, C. F., & DePaulo, B. M. (2006). Accuracy of deception judgments. Personality and Social Psychology Review, 10, 214-234.

Bond, C. F., Howard, A. R., Hutchison, J. L., & Masip, J. (2013). Overlooking the obvious: Incentives to lie. Basic and Applied Social Psychology, 35, 212-221.

DePaulo, B. M., Lindsay, J. J., Malone, B. E., Muhlenbruck, L., Charlton, K., & Cooper, H. (2003). Cues to deception. Psychological Bulletin, 129, 74-118.

Gilbert, D. T., Krull, D. S., & Malone, P. S. (1990). Unbelieving the unbelievable: Some problems in the rejection of false information. Journal of Personality and Social Psychology, 59, 601-613.

Halevy, R., Shalvi, S., & Verschuere, B. (2013). Being honest about dishonesty: Correlating self-reports and actual lying. Human Communication Research, 40, 54-72.

Nahari, G., Vrij, A., & Fisher, R. P. (2013). The verifiability approach: Countermeasures facilitate its ability to discriminate between truths and lies. Applied Cognitive Psychology, 28, 122-128.

Street, C. N. H. (2015). ALIED: Humans as adaptive lie detectors. Journal of Applied Research in Memory and Cognition, 4, 335-343.

Street, C. N. H., Bischof, W. F., Vadillo, M. A., & Kingstone, A. (2015). Inferring others’ hidden thoughts: Smart guesses in a low diagnostic world. Journal of Behavioral Decision Making. Advance online publication. doi: 10.1002/bdm.1904.

Street, C. N. H., & Kingstone, A. (2016). Aligning Spinoza with Descartes: An informed Cartesian account of the truth bias. Manuscript under review.

Street, C. N. H., & Masip, J. (2015). The source of the truth bias: Heuristic processing? Scandinavian Journal of Psychology, 56, 254-263.

Street, C. N. H., & Richardson, D. C. (2015a). Descartes versus Spinoza: Truth, uncertainty, and bias. Social Cognition, 33, 227-239.

Street, C. N. H., & Richardson, D. C. (2015b). Lies, damn lies, and expectations: How base rates inform lie-truth judgments. Applied Cognitive Psychology, 29, 149-155.

Manuscript received on March 30th, 2016.

Accepted on May 23rd, 2016.

This is the English version of

Street, C. N. H. (2016). ALIED: Una teoría de la detección de mentiras. Ciencia Cognitiva, 10:2, 45-48.